I am a PhD student at the University of Texas at Austin. My PhD advisor is Ray Mooney.

News

-

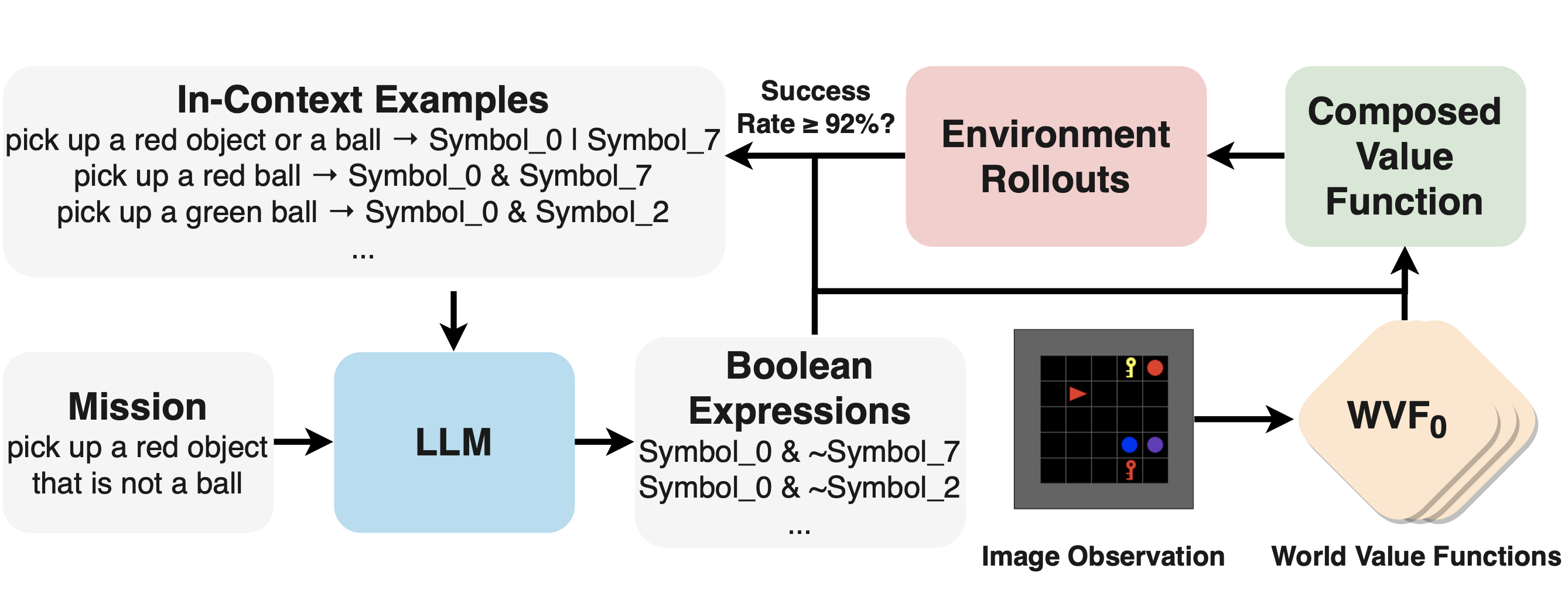

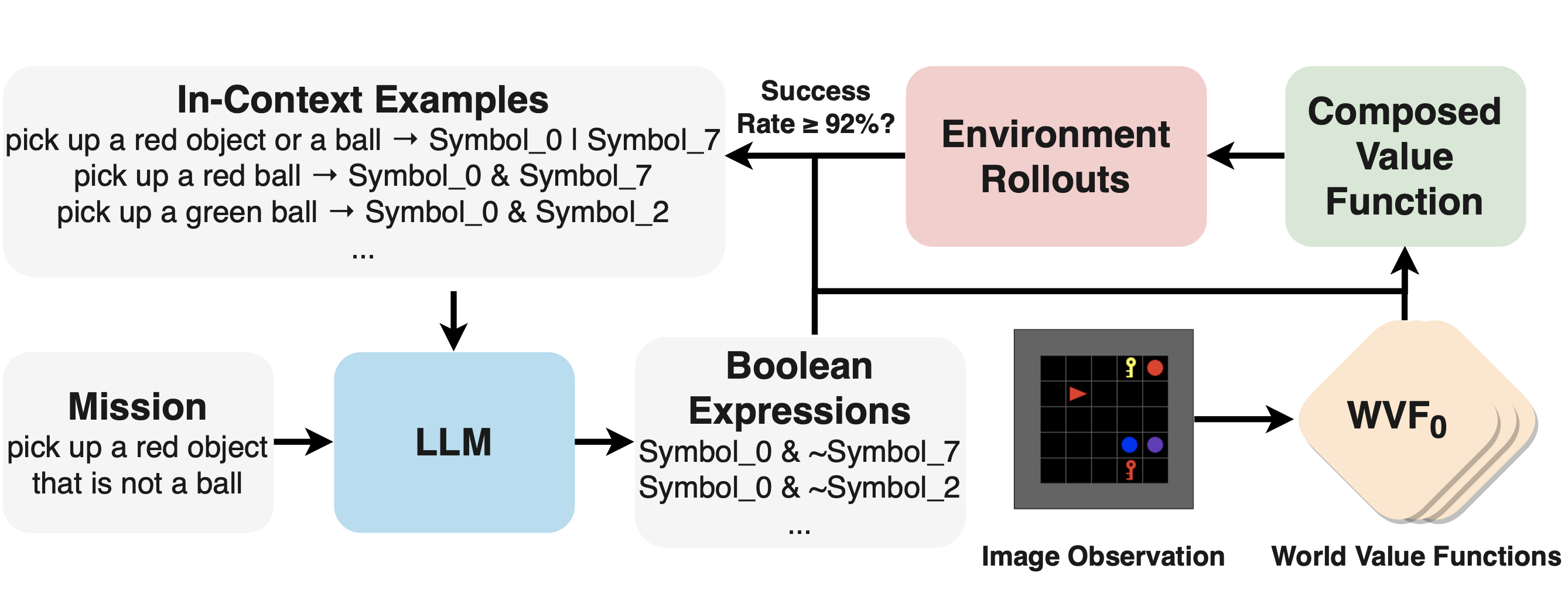

[May 2025]: Our TMLR 2024 paper, Compositional Instruction Following with Language Models and Reinforcement Learning, has been accepted to Reinforcement Learning Conference (RLC) 2025. This work introduces a novel in-context reinforcement learning approach that achieves systematic compositional generalization on a large suite of language-image RL tasks.

- [November 2024]: Excited to share CaT-Bench! Our benchmark, CaT-Bench: Benchmarking Language Model Understanding of Causal and Temporal Dependencies in Plans, presented at EMNLP 2024, evaluates how language models handle step dependencies in procedure understanding, revealing challenges for state-of-the-art models. Check out CaT-Bench on HuggingFace and explore the code here.

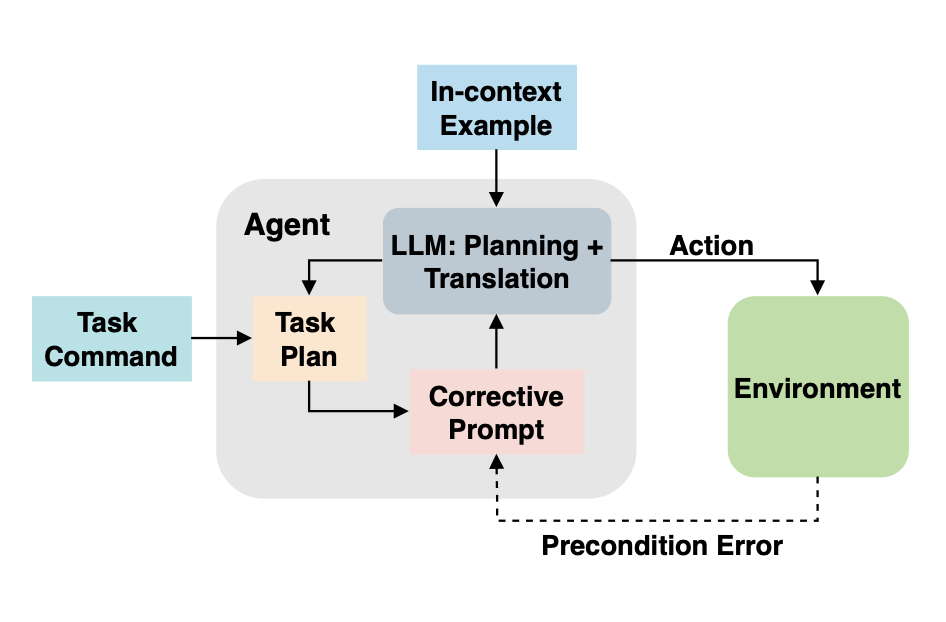

- [May 2024]: Announcing CAPE! In our ICRA 2024 paper, CAPE: Corrective Actions from Precondition Errors using Large Language Models, we introduced a method that empowers robots to autonomously make corrective actions by re-prompting large language models. Our implementation on the Spot robot demonstrates significant advancements in LLM-assisted robotic planning. Discover more on the CAPE project page and check out our code base.

Publications

2025

Compositional Instruction Following with Language Models and Reinforcement Learning

Vanya Cohen*, Geraud Nangue Tasse*, Nakul Gopalan, Steven James, Matthew Gombolay, Ray Mooney, Benjamin Rosman.

Reinforcement Learning Conference (RLC) 2025, August 2025.

[paper] [slides]

Introduces a novel semantic parsing technique that leverages policy rollouts within an environment and in-context learning. This approach enables solving the (currently) largest simultaneously learned suite of compositional language-RL tasks.

2024

Compositional Instruction Following with Language Models and Reinforcement Learning

Vanya Cohen*, Geraud Nangue Tasse*, Nakul Gopalan, Steven James, Matthew Gombolay, Ray Mooney, Benjamin Rosman.

TMLR 2024, December 2024.

[paper]

Introduces a novel semantic parsing technique that leverages policy rollouts within an environment and in-context learning. This approach enables solving the (currently) largest simultaneously learned suite of compositional language-RL tasks.

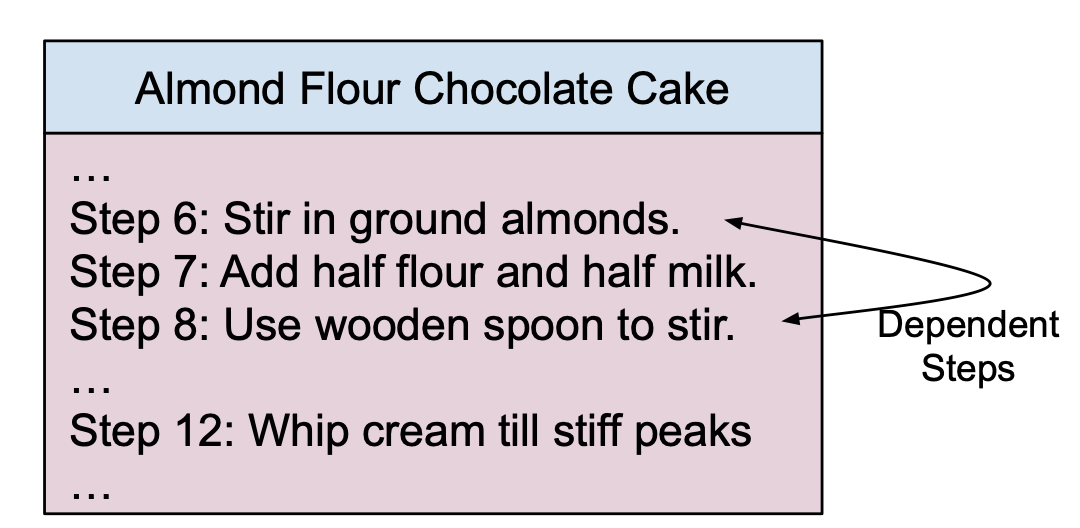

CaT-Bench: Benchmarking Language Model Understanding of Causal and Temporal Dependencies in Plans

Yash Kumar Lal*, Vanya Cohen*, Nathanael Chambers, Niranjan Balasubramanian, Raymond Mooney.

EMNLP 2024, November 2024.

[paper] [dataset] [code]

A benchmark that evaluates language models' ability to reason about step dependencies in task plans, using causal and temporal relations. We find SOTA LLMs perform poorly on this task despite its simplicity.

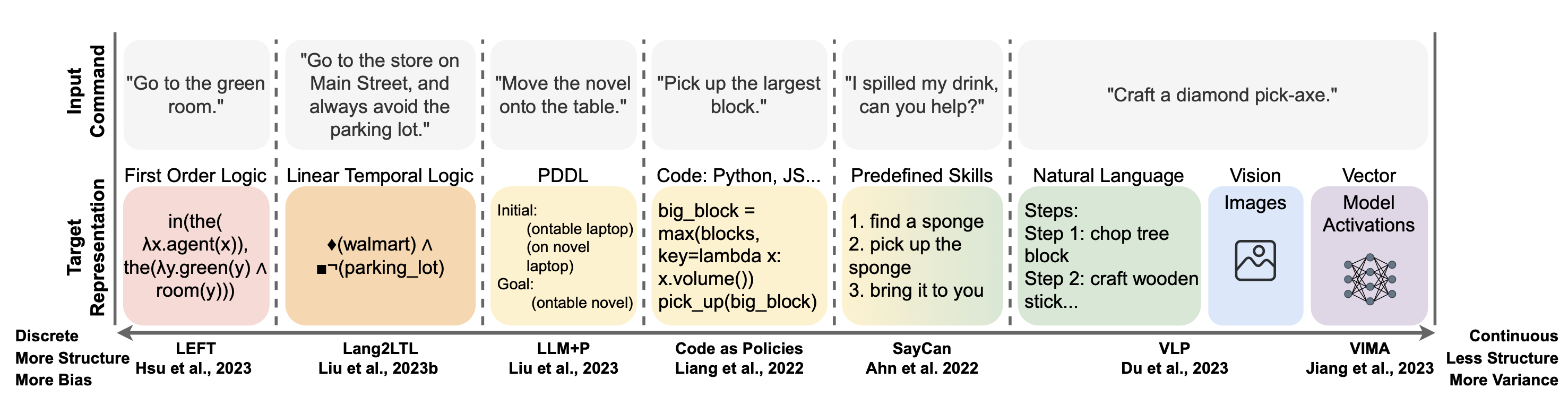

A Survey of Robotic Language Grounding: Tradeoffs Between Symbols and Embeddings

Vanya Cohen*, Jason Xinyu Liu*, Raymond Mooney*, Stefanie Tellex*, David Watkins*.

IJCAI 2024 Survey Track, August 2024.

[paper] [project page]

Robotic language grounding methods can be positioned along an axis that ranges from methods that map natural language to formal symbolic representations to those that map to high-dimensional vector spaces. The survey explores the trade-offs between interpretability, generalizability, and scalability.

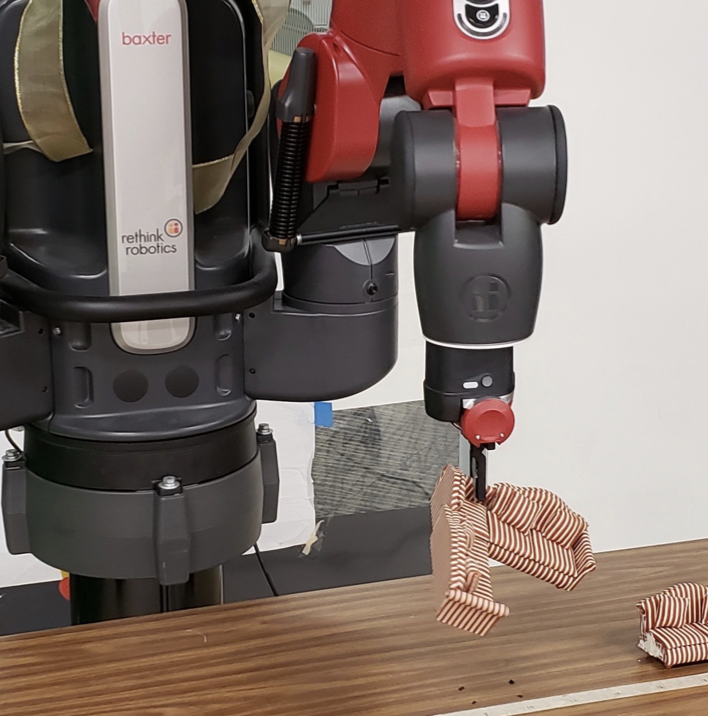

CAPE: Corrective Actions from Precondition Errors using Large Language Models

Shreyas Sundara Raman, Vanya Cohen, Ifrah Idrees, Eric Rosen, Ray Mooney, Stefanie Tellex, David Paulius.

ICRA 2024, May 2024.

[paper] [code] [project page]

CAPE resolves precondition errors in task planning for robotic agents by leveraging large language models. The method re-prompts LLMs using error feedback, allowing robots to make corrective actions in real-world environments.

2023

Using Planning to Improve Semantic Parsing of Instructional Texts

Vanya Cohen, Raymond Mooney

Workshop on Natural Language Reasoning and Structured Explanations at ACL 2023, June 2023, Pages 47-58.

[paper]

A symbolic planning-based decoder is introduced to enhance semantic parsing in instructional texts. Leveraging large language models, it generates action sequences in a formal language for improved execution accuracy in few-shot settings. Evaluation demonstrates significant gains in parsing quality across two recipe instruction domains.

2022

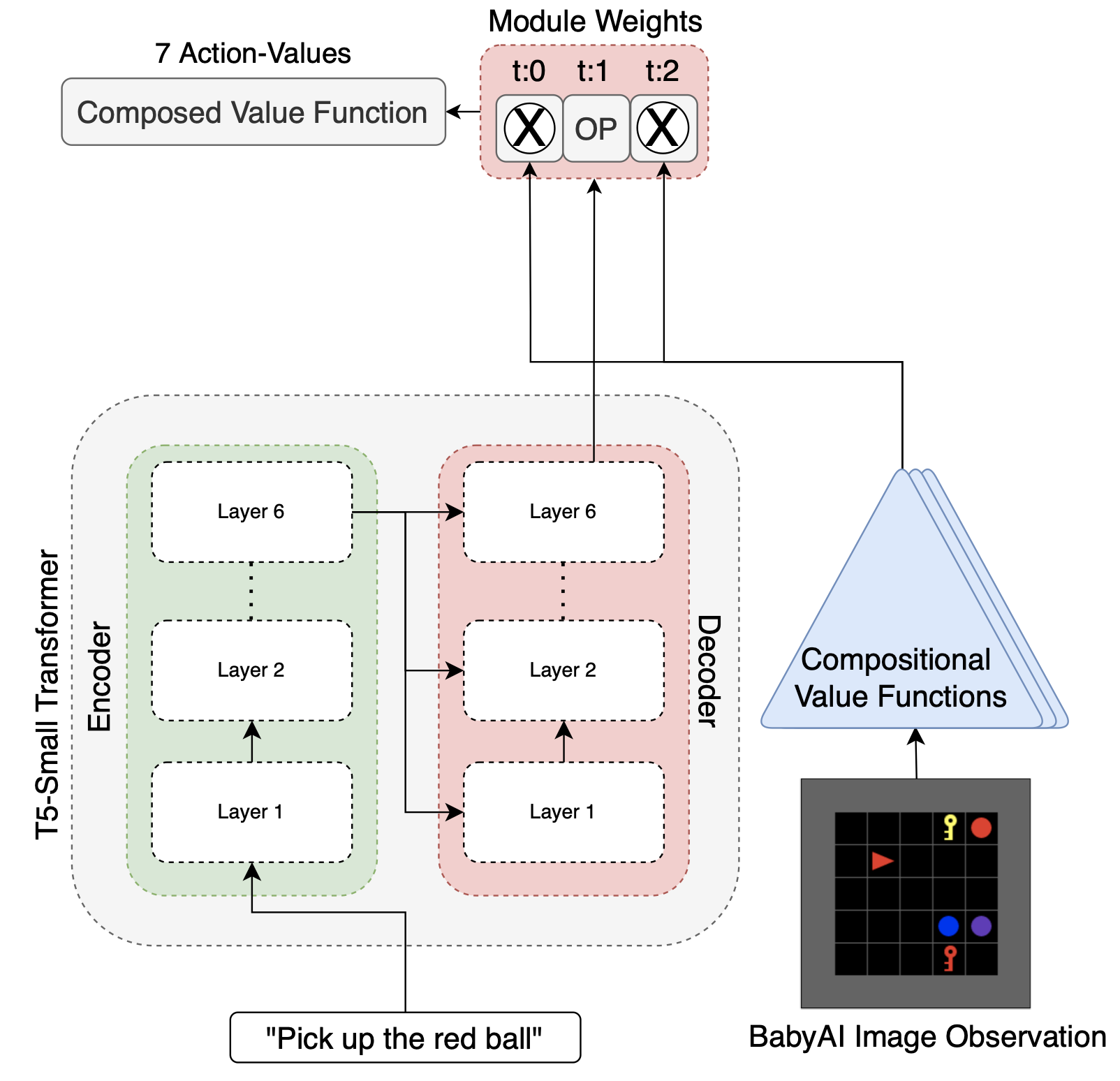

End-to-End Learning to Follow Language Instructions with Compositional Policies

Vanya Cohen*, Geraud Nangue Tasse*, Nakul Gopalan, Steven James, Ray Mooney, Benjamin Rosman

Workshop on Language and Robotics at CoRL 2022, December 2022.

[paper]

This paper introduces an end-to-end model combining large language models with pretrained compositional value functions to execute goal-reaching tasks specified in natural language. Evaluations in the BabyAI environment demonstrate the model's ability to generalize zero-shot to new combinations of task attributes.

2021

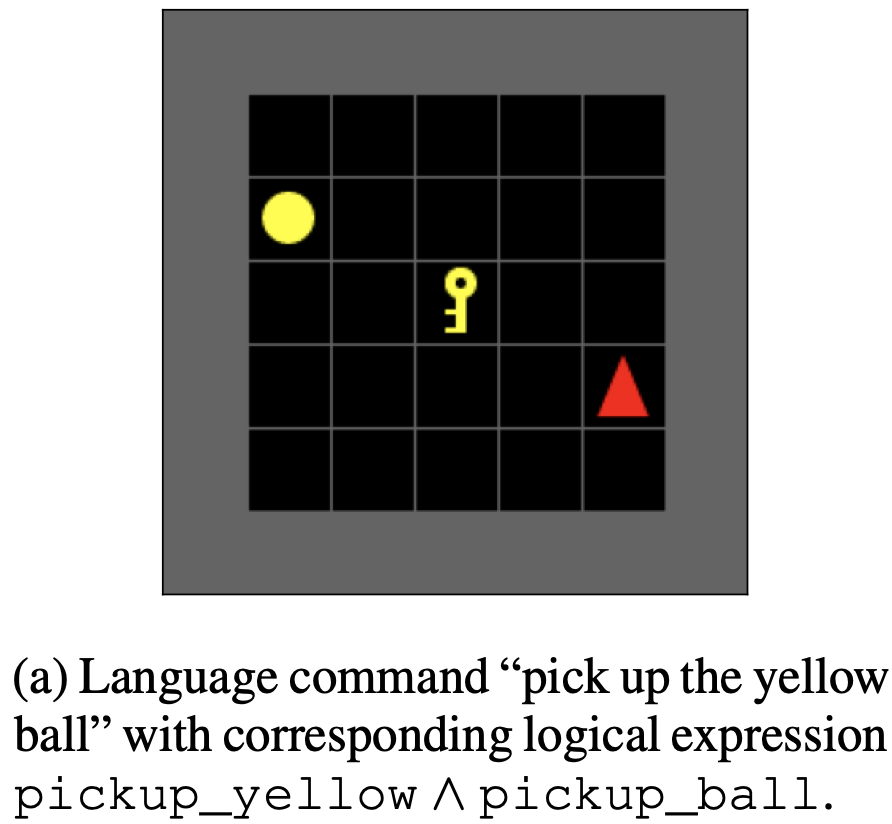

Learning to Follow Language Instructions with Compositional Policies

Vanya Cohen*, Geraud Nangue Tasse*, Nakul Gopalan, Steven James, Matthew Gombolay, Benjamin Rosman

AI-HRI Symposium at AAAI-FSS 2021, October 2021.

[paper]

A new framework leverages compositionality in value functions and language to execute natural language instructions in goal-reaching tasks. Using Boolean algebra to compose value functions, the approach reduces training steps by 86% for new tasks in the BabyAI domain, demonstrating efficient generalization.

2020

Co-Organizer: NewInML @ NeurIPS 2020: A Workshop for Newcomers to Machine Learning

Zhen Xu, Vanya Cohen, Shruti Mishra, MingYu Lu

NewInML @ NeurIPS 2020, December 2020.

[workshop]

Sessions included talks by renowned speakers such as Dr. Samy Bengio, Prof. David Jensen, Prof. Anima Anandkumar, and Prof. Isabelle Guyon, as well as a panel discussion with prominent ML experts. The workshop aimed to guide new researchers through the process of publishing high-quality papers, with oral presentations and awards for standout contributions.

OpenGPT-2: Open Language Models and Implications of Generated Text

Vanya Cohen, Aaron Gokaslan

XRDS: Crossroads, The ACM Magazine for Students, Fall 2020, September 2020.

[article]

Guest feature in ACM's XRDS Magazine. When OpenAI released its billion-parameter language model GPT-2, its attempts to withhold the model inspired two researchers to use open research practices to combat the misuse of machine learning.

2019

OpenGPT-2: We Replicated GPT-2 Because You Can Too

Aaron Gokaslan*, Vanya Cohen*, Ellie Pavlick, Stefanie Tellex.

NeurIPS NewInML Workshop, December 2019.

[article] [code]

OpenGPT-2 is a replication of OpenAI's GPT-2 model and one of the first publicly accessible language models. It used the OpenWebText dataset and helped pave the way for open-source LLMs.

Grounding Language Attributes to Objects using Bayesian Eigenobjects

Vanya Cohen*, Benjamin Burchfiel*, Thao Nguyen*, Nakul Gopalan, Stefanie Tellex, George Konidaris.

IROS 2019, November 2019.

[paper] [code] [project page]

This work presents a method to recognize 3D objects from natural language descriptions and depth images, leveraging unsupervised learning on 3D object meshes to generalize to novel viewpoints.

OpenWebText: An Open Source Replication of OpenAI's WebText

Aaron Gokaslan*, Vanya Cohen*, Ellie Pavlick, Stefanie Tellex.

NeurIPS NewInML Workshop, May 2019.

[dataset]

OpenWebText replicates OpenAI's WebText dataset and has become a widely used open-source dataset for training language models, with over 4 million downloads.
